Artificial Intelligence (AI) helps us find insurance policies, work out if we’re credit-worthy, and even diagnose our illnesses.

In many ways, these systems have improved our lives, saving us time on repetitive data entry and making accurate recommendations.

In the past few years, however, organizations from Apple to Google have come under scrutiny for apparent bias in their AI systems.

Since much AI technology is designed to take the human guesswork out of decision-making, this bias is an ironic turn—and one that can have sinister results.

“It is becoming more and more important to make certain that AI applications are unbiased, because AI is taking over and making more decisions about people’s lives,” says Dr Anton Korinek, associate professor of business administration at Darden School of Business.

As more organizations begin to implement AI, it’s crucial that tomorrow’s managers can recognize, and stamp out, these unfair biases.

Examples of bias in AI

In recent years, several biased AI systems have come to light, Anton observes.

One prominent case from 2019 saw tech giant Apple accused of sexism when the company’s new credit card seemed to offer men more credit than women, even when the women had better credit scores.

Steve Wozniak himself, Apple’s cofounder, tweeted a complaint that his wife was offered a lower credit limit than him despite her higher credit score. In response to multiple complaints, a US financial regulator is investigating the sexism claims.

Another, more serious case is that of COMPAS. In 2016, it came to light that the computer program, used to calculate the likelihood of prisoners reoffending, was unfairly biased against African-American defendants.

The system predicted that black people would reoffend at twice the rate of white people, despite evidence to the contrary.

Myriad other cases speckle AI’s history—from Google’s photo app labelling black people as ‘gorillas’, to Facebook advertising high-paid job openings to more men than women.

So, what’s the explanation? How are systems ostensibly free from human error reflecting entrenched human biases?

© JHVEPhoto via iStock - Google made headlines in 2015 when its photo app AI mislabelled people as animals

What causes AI bias?

When AI goes wrong, you might be tempted to blame the algorithm itself. In reality, though, humans are the ones at fault.

“I would not assign blame to AI itself, but to the creators of the AI algorithms who have failed to ensure their application is unbiased,” says Anton.

Dr Anindya Ghose, professor of information, operations, and management sciences at NYU Stern, agrees. Bad data is usually at the root of the problem, he believes.

“An algorithm is only as good as the data it’s trained on,” he says. “To use an old data science adage: garbage in, garbage out.”

Algorithms are “data-hungry,” Anindya explains, and learn over time based on the data they are given. If an algorithm is trained on data that’s systematically biased, it will start to make decisions that are biased.

One notable example of this is the US police software, PredPol. The software uses an algorithm to predict ‘crime hotspots’, based on where previous arrests have been made.

Because black Americans are almost six times more likely to be arrested than their white counterparts, it’s likely that African-American neighborhoods appear as ‘hotspots’ unfairly often, compounding the problem in a feedback loop.
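The feedback loop described above can be made concrete with a short Python sketch. It is a toy model, not a reconstruction of how PredPol works: the two neighborhoods, the 60/40 starting records, the patrol numbers, and the function name `simulate_hotspot_feedback` are all assumptions chosen for illustration. Both neighborhoods are given the same number of arrests per patrol, so any gap in recorded arrests comes only from where patrols are sent.

```python
def simulate_hotspot_feedback(recorded_arrests, rounds=5, hotspot_patrols=70,
                              other_patrols=30, arrests_per_patrol=0.5):
    """Toy model of a 'hotspot' feedback loop (illustrative assumptions only).

    recorded_arrests: dict mapping neighborhood name -> historical arrest count.
    Every neighborhood yields the same arrests per patrol, so the underlying
    crime rate is identical everywhere; only patrol allocation differs.
    Returns the initial hotspot's share of all recorded arrests after each round.
    """
    arrests = dict(recorded_arrests)  # copy so the caller's data is untouched
    first_hotspot = max(arrests, key=arrests.get)
    shares = []
    for _ in range(rounds):
        # The neighborhood with the most recorded arrests is flagged as the hotspot.
        hotspot = max(arrests, key=arrests.get)
        for name in arrests:
            patrols = hotspot_patrols if name == hotspot else other_patrols
            # More patrols produce more recorded arrests, whatever the true crime rate.
            arrests[name] += patrols * arrests_per_patrol
        shares.append(arrests[first_hotspot] / sum(arrests.values()))
    return shares


if __name__ == "__main__":
    history = {"A": 60, "B": 40}  # hypothetical, already skewed arrest records
    for round_number, share in enumerate(simulate_hotspot_feedback(history), start=1):
        print(f"Round {round_number}: neighborhood A holds {share:.1%} of recorded arrests")
```

Under these assumptions, neighborhood A’s share of recorded arrests climbs every round from its 60% starting point toward 70%, even though the two areas differ only in their historical records.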

With careful consideration, however, AI can actually begin to tackle such bias. In 2019, San Francisco district attorney George Gascón started ‘blind-charging’ suspects.

George recruited scientists from Stanford University to build an AI system that would remove racial information from police reports. The goal is to help prosecutors avoid unintentional racial bias when they first review a case.

“AI can be biased insofar as it makes decisions based on human-generated information that contains human biases,” comments Adam Waytz, professor of management and organization at Kellogg School of Management.

“Humans are also capable of preventing bias in AI by attending to these factors,” he notes.

Tackling bias in AI

Recognizing and fixing biased data requires a specific skill set, says Anindya, and as training grounds for future managers, business schools have a role to play.

“We definitely have a growing need for more quantitative managers,” he notes. “Managers who are comfortable and conversant in data.”

Anindya acts as academic director for the MSc in business analytics at NYU Stern. On the program, students have plenty of opportunities to practice ‘cleaning’ data to improve the accuracy and fairness of AI systems.

“We have several courses where we train students to eliminate biases from data as much as possible,” he explains. “This involves teaching them to use tools such as Python programming, and big data infrastructures.”

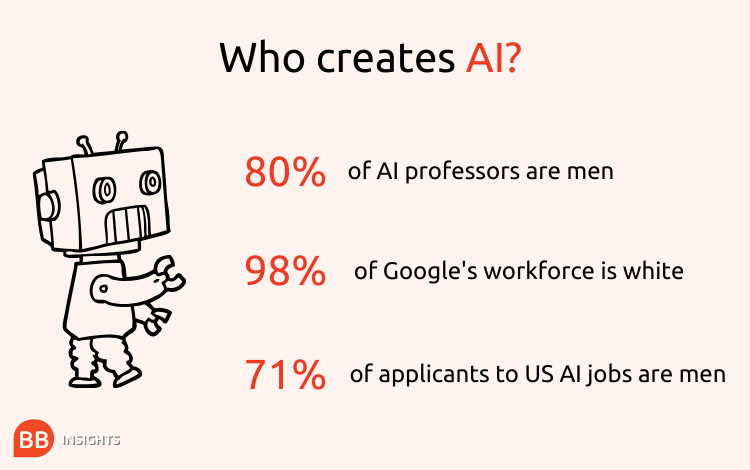

Anindya believes that, in the vast majority of cases, biased algorithms are created unintentionally. Part of the reason the bias goes unspotted is that, for the most part, high-profile algorithms are designed by teams of white men.

According to a 2019 study by New York University, 80% of AI professors are men, while AI giants Google, Microsoft, and Facebook all employ a workforce that is over 90% white.

Source: National Science Board

In this homogeneous environment, it can be easy for biases against other demographics to slip through the cracks.

Whatever the cause of the bias, it’s crucial that organizations take responsibility.

“If there’s conclusive evidence that there’s bias in the data in the algorithm, the onus is on the company to show they were unaware of the biases, and systematically clean the data,” says Anindya.

In other words, despite increasing automation, AI still requires human oversight to ensure it’s developed and deployed fairly. This is something that human managers must be aware of.

According to Brian Uzzi, professor of leadership and organizational change at Kellogg, it may be some time before developers and managers can leave their algorithms unchecked.

“To minimize or adjust an AI system's predictions for possible bias, special care needs to be placed on using data that reflects the diversity of the phenomena under study, using objective tests that detect bias, and incorporate human interpretations of the predictions,” he concludes.
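One simple example of the kind of objective test Brian describes is to compare approval rates across groups. The Python sketch below computes a disparate impact ratio: the lowest group’s approval rate divided by the highest group’s. The 0.8 threshold follows the ‘four-fifths’ rule of thumb used in US employment guidelines. The sample decisions and the function names are hypothetical, and a real audit would likely combine several complementary tests.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to its approval rate.

    decisions: iterable of (group, approved) pairs, where approved is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group was favored
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical credit decisions: 8 of 10 men approved, 5 of 10 women approved.
    sample = ([("men", True)] * 8 + [("men", False)] * 2
              + [("women", True)] * 5 + [("women", False)] * 5)
    ratio = disparate_impact_ratio(sample)
    verdict = "flag for human review" if ratio < 0.8 else "within the four-fifths rule"
    print(f"Disparate impact ratio: {ratio:.2f} ({verdict})")
```

A ratio below the threshold does not prove discrimination on its own, but it gives managers a clear signal to bring in the human interpretation that Brian calls for.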

As AI technology continues to enter new areas of our lives, it’s crucial that developers and implementers make a conscious effort to compensate for biased data. Ensuring that a diverse group of people is consulted as algorithms are created and tested will be an important place to start.

BB Insights explores the latest research and trends from the business school classroom, drawing on the expertise of world-leading professors to inspire and inform current and future leaders